1. Setup

Here we use a 2xXeon Silver 4114 Intel CPU server with 256GB RAM and 1TB NVMe (data) and 120GB SSD (system); the software is Oracle Linux 7.6, PostgreSQL 11.1, RocksDB 5.17.2, and HammerDB 3.1.2. Known limitations

So far we have not run PgRocks sync engine on large-scale data inputs. But it is clear that a) RocksDB locks for writing critically limit the speed and b) our sync engine may not be very fast. That is why we do not expect to get high on NOPM ("new orders per minute"). Instead, we are looking forward to challenge the sync engine consistency and watch for sync errors.With the current hard and software setup, we have been able to score ~500,000 NOPM when running "pure" single-master PostgreSQL 11.1, i.e. without PgRocks plugged in. These results can be found at http://pgrocks.com/tpc/

3. Running test

a. Data preparationWe use HammerDB to create the initial tables, indexes and PostgreSQL PL/PGSQL functions necessary for the test. We select "100 warehouses" as option when creating the dataset, and "40 virtual users" option as the number of virtual CPUs. It takes about 5 minutes on our setup to get the initial data ready to begin the custom preparation.

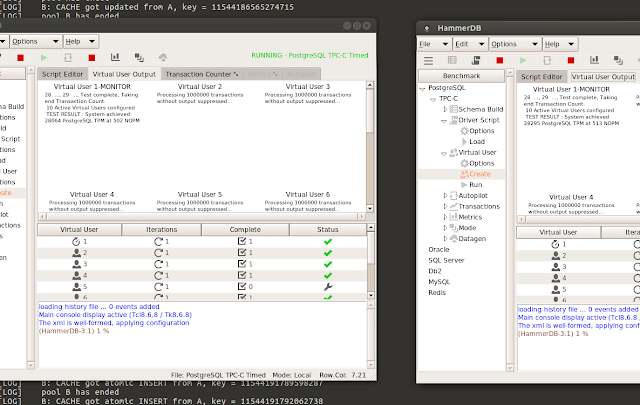

Because we are interested to calc the combined NOPM metrics, we will be syncing the "orders" table between the test nodes A and B. We will prepare the custom data on node A (localhost, port 5432), copy to node B (localhost, port 9873). Then we start PgRocks on both nodes, switch on the sync engine, and then we will run two instances of HammerDB simultaneously on two nodes:

Here is how we configure the two instances of HammerDB:

And here, step by step, how we prepare the custom data:

1. We write table orders to RocksDB. For that we use "writing.pl" script that splits the task to 16 parallel workers and creates 16 new RocksDB stores. Before we run it, we load PL/PGSQL library "lib_v3.sql". Both scripts and libraries are found in PgRocks github at https://github.com/kl3eo/PgRocks

[postgres@alex6 db_v2]$ psql tpcc < lib_v3.sql

[postgres@alex6 db_v2]$ ./writing.pl orders 5432 tpcc 3000000 1 16

[postgres@alex6 db_v2]$ du /opt/nvme/pgrocks/

[postgres@alex6 db_v2]$ ./writing.pl orders 5432 tpcc 3000000 1 16

[postgres@alex6 db_v2]$ du /opt/nvme/pgrocks/

8304 /opt/nvme/pgrocks/rocksdb_7

11108 /opt/nvme/pgrocks/rocksdb_15

10332 /opt/nvme/pgrocks/rocksdb_14

8516 /opt/nvme/pgrocks/rocksdb_6

9644 /opt/nvme/pgrocks/rocksdb_13

9652 /opt/nvme/pgrocks/rocksdb_4

11524 /opt/nvme/pgrocks/rocksdb_16

8952 /opt/nvme/pgrocks/rocksdb_5

8084 /opt/nvme/pgrocks/rocksdb_8

8520 /opt/nvme/pgrocks/rocksdb_11

11128 /opt/nvme/pgrocks/rocksdb_2

10360 /opt/nvme/pgrocks/rocksdb_3

8952 /opt/nvme/pgrocks/rocksdb_12

11436 /opt/nvme/pgrocks/rocksdb_1

8100 /opt/nvme/pgrocks/rocksdb_9

8288 /opt/nvme/pgrocks/rocksdb_10

152904 /opt/nvme/pgrocks/

tpcc=# select count(*) from orders_v3_dna;

count

---------

3000000

(1 row)

So far we've written 3 million records from orders to 16 RocksDB stores located at /opt/nvme/pgrocks/, and a new table orders_v3_dna with keys to all the RocksDB values containing rows of the original table.

2. Now we read the values from the RocksDB stores back to PostgreSQL DB "tpcc" into a new table orders_c0 which holds the rows from the old table but with new columns "key" and "mark" - the latter holds the number of the RocksDB store where the record can be found under the given "key".

[postgres@alex6 db_v2]$ ./reading.pl orders 5432 tpcc 1 1 8 && ./reading.pl orders 5432 tpcc 0 9 8 2>&1 &

( We've run consequently two 8-parallel scrips in order to bind to our resources limit.)

3. We don't need the old table so we drop it and rename the new table:

drop table orders;

alter table orders_c0 rename to orders;

alter table orders_c0 rename to orders;

4. We create a new index, change owner and replace indexes from the old table with new indexes which are unique on combination with our "key" column:

create index orders_c0_idx on orders (key,mark);

alter table orders owner to tpcc;

alter table orders_v3_dna owner to tpcc;

alter table orders add constraint orders_i1 primary key (key,o_w_id, o_d_id, o_id);

create unique index orders_i2 on orders (key,o_w_id,o_d_id,o_c_id,o_id);

alter table orders owner to tpcc;

alter table orders_v3_dna owner to tpcc;

alter table orders add constraint orders_i1 primary key (key,o_w_id, o_d_id, o_id);

create unique index orders_i2 on orders (key,o_w_id,o_d_id,o_c_id,o_id);

5. Now we have finished the data still there are changes to be done to functions used by HammerDB:

[postgres@alex6 db_v2]$ psql tpcc < neword.sql

6. Now dump the database "tpcc" then create and restore it on Node B:

[postgres@alex6 db_v2]$ pg_dump -c -o tpcc > /opt/nvme/csv/tpcc_pgrocks.out

[postgres@alex6 db_v2]$ createdb -p 9873 tpcc

[postgres@alex6 db_v2]$ psql -p 9873 tpcc < /opt/nvme/csv/tpcc_pgrocks.out

That's it with the preparation.

[postgres@alex6 db_v2]$ pg_dump -c -o tpcc > /opt/nvme/csv/tpcc_pgrocks.out

[postgres@alex6 db_v2]$ createdb -p 9873 tpcc

[postgres@alex6 db_v2]$ psql -p 9873 tpcc < /opt/nvme/csv/tpcc_pgrocks.out

That's it with the preparation.

Now we are ready to begin test and we now start the sync engine:

[postgres@alex6 db_v2]$ cat s.sh

#!/bin/bash

node server_pool_i.js >> server.log 2>&1 &

node server_pool_u.js >> server.log 2>&1 &

#!/bin/bash

node server_pool_i.js >> server.log 2>&1 &

node server_pool_u.js >> server.log 2>&1 &

We run the test for 30 minutes with 1 min ramp.

The CPUs power is used at ~ 70%:

And as we foresaw, we didn't get really high scores:

We've achieved the total of > 1000 NOPM per minute from two nodes, that is ~16 new records per second. In order to compare the TPCC result to just pushing "INSERT" as fast as possible, we run a simple script "ins.pl":

[postgres@alex6 db_v2]$ ./ins.pl > ins.log.1 2>&1 & ./ins.pl 9873 > ins.log.2 2>&1 & ./ins.pl > ins.log.3 2>&1 & ./ins.pl 9873 > ins.log.4 2>&1 & ./ins.pl > ins.log.5 2>&1 & ./ins.pl 9873 > ins.log.6 2>&1 & ./ins.pl > ins.log.7 2>&1 & ./ins.pl 9873 > ins.log.8 2>&1 & ./ins.pl > ins.log.9 2>&1 & ./ins.pl 9873 > ins.log.10 2>&1 &

When it's done, we have inserted 100,000 new records in just 513 sec (RUN#2), which is ~195 rows per second, no errors were detected in sync between nodes, that is, the tables orders on both nodes are identical and contain the same number of rows coming from each node:

RUN#1

Tini=1544250995

Tfin=1544251501

deltaT=506sec

deltaR=99998

v=198 r/s

RUN#2

RUN#1

Tini=1544250995

Tfin=1544251501

deltaT=506sec

deltaR=99998

v=198 r/s

RUN#2

Tini=1544252195

Tfin=1544252708

deltaT=513s

deltaR=100000

v=195 r/s

Tfin=1544252708

deltaT=513s

deltaR=100000

v=195 r/s

tpcc=# select to_timestamp(1544252195);

to_timestamp

------------------------

2018-12-08 09:56:35+03

(1 row)

tpcc=# select to_timestamp(1544252708);

to_timestamp

------------------------

2018-12-08 10:05:08+03

(1 row)

tpcc=# select count(*) from orders where to_timestamp(substr(key::text,2,10)::bigint) >= '2018-12-08 09:56:35' and to_timestamp(substr(key::text,2,10)::bigint) <= '2018-12-08 10:05:08';

count

--------

100000

(1 row)

tpcc=# select count(*) from orders where to_timestamp(substr(key::text,2,10)::bigint) >= '2018-12-08 09:56:35' and to_timestamp(substr(key::text,2,10)::bigint) <= '2018-12-08 10:05:08' and substr(key::text,0,2)::int = 1;

count

-------

50000

(1 row)

tpcc=# select count(*) from orders where to_timestamp(substr(key::text,2,10)::bigint) >= '2018-12-08 09:56:35' and to_timestamp(substr(key::text,2,10)::bigint) <= '2018-12-08 10:05:08' and substr(key::text,0,2)::int = 2;

count

-------

50000

(1 row)

to_timestamp

------------------------

2018-12-08 09:56:35+03

(1 row)

tpcc=# select to_timestamp(1544252708);

to_timestamp

------------------------

2018-12-08 10:05:08+03

(1 row)

tpcc=# select count(*) from orders where to_timestamp(substr(key::text,2,10)::bigint) >= '2018-12-08 09:56:35' and to_timestamp(substr(key::text,2,10)::bigint) <= '2018-12-08 10:05:08';

count

--------

100000

(1 row)

tpcc=# select count(*) from orders where to_timestamp(substr(key::text,2,10)::bigint) >= '2018-12-08 09:56:35' and to_timestamp(substr(key::text,2,10)::bigint) <= '2018-12-08 10:05:08' and substr(key::text,0,2)::int = 1;

count

-------

50000

(1 row)

tpcc=# select count(*) from orders where to_timestamp(substr(key::text,2,10)::bigint) >= '2018-12-08 09:56:35' and to_timestamp(substr(key::text,2,10)::bigint) <= '2018-12-08 10:05:08' and substr(key::text,0,2)::int = 2;

count

-------

50000

(1 row)

4. RocksDB compaction style and locking

We first used RocksDB with its default "level" style of compaction and the default number of six layers. This turned out an inappropriate configuration as although we didn't have errors doing nodes sync, we couldn't get more than 50 r/s speed of inserts in the first run and with each new run the speed would decrease dramatically to mere 10 r/s after the fourth run.

After trying various options we have settled upon a variant of "universal style compaction" with just the default L0 compaction, i.e.

rocksdb_options_optimize_level_style_compaction(rocksdb_options, 0);

rocksdb_options_set_create_if_missing(rocksdb_options, createIfMissing ? 1 : 0);

rocksdb_options_set_compression(rocksdb_options, 0);

rocksdb_options_set_write_buffer_size(rocksdb_options, 64 << 20); // 64MB

rocksdb_options_set_max_open_files(rocksdb_options, 300);

rocksdb_options_set_num_levels(rocksdb_options,1);

rocksdb_options_set_keep_log_file_num(rocksdb_options,2);

rocksdb_options_set_allow_concurrent_memtable_write(rocksdb_options, 1);

rocksdb_options_set_min_write_buffer_number_to_merge(rocksdb_options,1);

rocksdb_options_set_compaction_style(rocksdb_options,1);

Selecting these options would allow us to avoid the appearance of thousands of small one-record-per-file SST files during compaction to L1, and this turned out to be the factor that made the overall speed stable and not drop with each new run.

We have a loop in our C API "rocksdb_open()" wrapper to wait for the previous writer lock to finish:

After trying various options we have settled upon a variant of "universal style compaction" with just the default L0 compaction, i.e.

rocksdb_options_optimize_level_style_compaction(rocksdb_options, 0);

rocksdb_options_set_create_if_missing(rocksdb_options, createIfMissing ? 1 : 0);

rocksdb_options_set_compression(rocksdb_options, 0);

rocksdb_options_set_write_buffer_size(rocksdb_options, 64 << 20); // 64MB

rocksdb_options_set_max_open_files(rocksdb_options, 300);

rocksdb_options_set_num_levels(rocksdb_options,1);

rocksdb_options_set_keep_log_file_num(rocksdb_options,2);

rocksdb_options_set_allow_concurrent_memtable_write(rocksdb_options, 1);

rocksdb_options_set_min_write_buffer_number_to_merge(rocksdb_options,1);

rocksdb_options_set_compaction_style(rocksdb_options,1);

Selecting these options would allow us to avoid the appearance of thousands of small one-record-per-file SST files during compaction to L1, and this turned out to be the factor that made the overall speed stable and not drop with each new run.

We have a loop in our C API "rocksdb_open()" wrapper to wait for the previous writer lock to finish:

int counter = 1000;

while (err != NULL && counter > 0) {

err = NULL;

usleep(10000);

rocksdb = rocksdb_open(rocksdb_options, name, &err);

counter--;

}

if (err != NULL) {

ereport(ERROR,

(errcode(ERRCODE_NO_DATA),

errmsg("[rocksdb], open error: %s", err)));

} else {

FILE *file = fopen("/tmp/report", "a+");

if (file)

{

fprintf(file, "%d\n", 1000-counter);

fclose(file);

}

}

err = NULL;

usleep(10000);

rocksdb = rocksdb_open(rocksdb_options, name, &err);

counter--;

}

if (err != NULL) {

ereport(ERROR,

(errcode(ERRCODE_NO_DATA),

errmsg("[rocksdb], open error: %s", err)));

} else {

FILE *file = fopen("/tmp/report", "a+");

if (file)

{

fprintf(file, "%d\n", 1000-counter);

fclose(file);

}

}

When we check the "/tmp/report" file (see example below), we see that about 30% of the records are not zero, with mean 2.88 * 10 ms ~ 29 ms. Which gives us a mean delay about 10 ms per record, while still having result speed of ~200 rows per second means the overall impact of locking must be heavy.

...

...

0

5

0

0

0

0

0

0

2

0

0

2

0

0

1

5

...

...

If we trim the code above and decrease the "usleep" argument to 5,000, we may expect some growth of the overall speed. Let's double check it and see:

RUN#3

Tini=1544267952

Tfin=1544268445

deltaT=493s

deltaR=99999

v = 203 r/s

RUN#4

Tini=1544269110

Tfin=1544269618

deltaT=508s

deltaR=100000

v = 197 r/s

Alas, there is no conclusive evidence that our assumption was correct . But we have not slowed down the speed with each new run as total rows in orders and in RocksDB were growing:

tpcc=# select count(*) from orders;

count

---------

3399997

(1 row)

[postgres@alex6 db_v2]$ du /opt/nvme/pgrocks/

10824 /opt/nvme/pgrocks/rocksdb_7

13608 /opt/nvme/pgrocks/rocksdb_15

12884 /opt/nvme/pgrocks/rocksdb_14

11060 /opt/nvme/pgrocks/rocksdb_6

12196 /opt/nvme/pgrocks/rocksdb_13

12192 /opt/nvme/pgrocks/rocksdb_4

14084 /opt/nvme/pgrocks/rocksdb_16

11496 /opt/nvme/pgrocks/rocksdb_5

10640 /opt/nvme/pgrocks/rocksdb_8

11072 /opt/nvme/pgrocks/rocksdb_11

13672 /opt/nvme/pgrocks/rocksdb_2

12920 /opt/nvme/pgrocks/rocksdb_3

11496 /opt/nvme/pgrocks/rocksdb_12

13988 /opt/nvme/pgrocks/rocksdb_1

10664 /opt/nvme/pgrocks/rocksdb_9

10824 /opt/nvme/pgrocks/rocksdb_10

193624 /opt/nvme/pgrocks/

(We here might have lost the insert of 3 rows in 4 runs due to race condition while terminating scripts, but the total number of new rows is the same on both nodes as there were no errors during the sync process).

NB: the CPUs were loaded about 35% in average during the parallel 10 runs of "ins.pl".

...

...

0

5

0

0

0

0

0

0

2

0

0

2

0

0

1

5

...

...

If we trim the code above and decrease the "usleep" argument to 5,000, we may expect some growth of the overall speed. Let's double check it and see:

RUN#3

Tini=1544267952

Tfin=1544268445

deltaT=493s

deltaR=99999

v = 203 r/s

RUN#4

Tini=1544269110

Tfin=1544269618

deltaT=508s

deltaR=100000

v = 197 r/s

Alas, there is no conclusive evidence that our assumption was correct . But we have not slowed down the speed with each new run as total rows in orders and in RocksDB were growing:

tpcc=# select count(*) from orders;

count

---------

3399997

(1 row)

[postgres@alex6 db_v2]$ du /opt/nvme/pgrocks/

10824 /opt/nvme/pgrocks/rocksdb_7

13608 /opt/nvme/pgrocks/rocksdb_15

12884 /opt/nvme/pgrocks/rocksdb_14

11060 /opt/nvme/pgrocks/rocksdb_6

12196 /opt/nvme/pgrocks/rocksdb_13

12192 /opt/nvme/pgrocks/rocksdb_4

14084 /opt/nvme/pgrocks/rocksdb_16

11496 /opt/nvme/pgrocks/rocksdb_5

10640 /opt/nvme/pgrocks/rocksdb_8

11072 /opt/nvme/pgrocks/rocksdb_11

13672 /opt/nvme/pgrocks/rocksdb_2

12920 /opt/nvme/pgrocks/rocksdb_3

11496 /opt/nvme/pgrocks/rocksdb_12

13988 /opt/nvme/pgrocks/rocksdb_1

10664 /opt/nvme/pgrocks/rocksdb_9

10824 /opt/nvme/pgrocks/rocksdb_10

193624 /opt/nvme/pgrocks/

(We here might have lost the insert of 3 rows in 4 runs due to race condition while terminating scripts, but the total number of new rows is the same on both nodes as there were no errors during the sync process).

NB: the CPUs were loaded about 35% in average during the parallel 10 runs of "ins.pl".

5. Conclusions

We have been able here to test the consistency of PgRocks multimaster mode on a model with just two nodes running on a single server, and while the overall speed was low, we have seen stable and error-free work of the sync engine put in stress of data input and update. The RocksDB locking and imperfect selection of compaction style may have been the two general contributing factors to low results of the test when compared to single-master PostgreSQL results.